Mode Connectivity

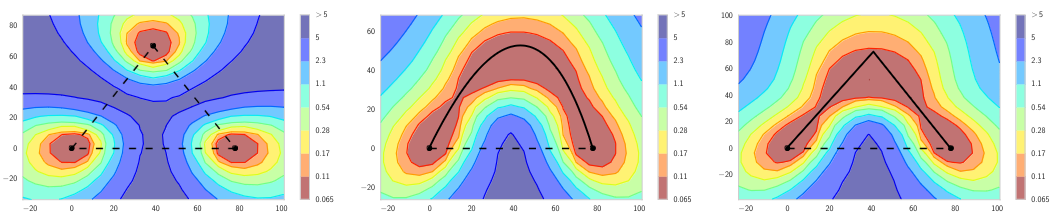

The loss landscapes of deep neural networks are highly complex, and their geometric properties remain underexplored. Mode connectivity is the observation that the optima found by independently trained models are connected by high-accuracy pathways, and that these pathways between modes can be approximated with simple curves. Such paths challenge the prevailing notion of isolated local optima in high-dimensional loss landscapes.

Key Ideas

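Let $\hat{w}_1$ and $\hat{w}_2$ be the weights of two independently trained networks, each minimizing some loss $\mathcal{L}(w)$. Let $\phi_\theta : [0, 1] \to \mathbb{R}^{|\text{net}|}$, where $|\text{net}|$ is the number of network weights, be a continuous piecewise smooth parametric curve with parameters $\theta$ whose endpoints correspond to the network weights, $\phi_\theta(0) = \hat{w}_1$ and $\phi_\theta(1) = \hat{w}_2$. The high-accuracy pathway is described by the curve parameters $\theta$ that minimize the expectation of the loss with respect to a uniform distribution on the curve.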
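Written out, the objective can be sketched as follows. The symbols here are assumptions wherever the text above does not name them: $\phi_\theta$ is the curve, $t \in [0, 1]$ is the position along it, and $\mathcal{L}$ is the training loss. The expectation under a uniform distribution on the curve is a normalized line integral:

$$
\hat{\ell}(\theta) = \frac{\int \mathcal{L}(\phi_\theta)\, d\phi_\theta}{\int d\phi_\theta}
$$

Because the normalizing arc length itself depends on $\theta$, this form is awkward to differentiate. A simpler surrogate, assumed in the rest of this section, takes the expectation over a uniformly distributed curve position instead:

$$
\ell(\theta) = \int_0^1 \mathcal{L}(\phi_\theta(t))\, dt = \mathbb{E}_{t \sim U(0, 1)}\left[\mathcal{L}(\phi_\theta(t))\right]
$$

The two agree only when the curve is traversed at constant speed, but the surrogate admits an unbiased stochastic gradient from a single sampled $t$.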

Many parameterizations are possible; the paper uses the polygonal chain and the Bézier curve as examples. A quadratic Bézier curve provides a natural and smooth parameterization.
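The two parameterizations can be sketched in a few lines. This is a minimal illustration, assuming a single trainable bend or control point `theta` and weights stored as flat lists of floats; the function names `bezier_point` and `polychain_point` are hypothetical and not part of any library.

```python
from typing import List, Sequence

Vector = List[float]


def bezier_point(w1: Sequence[float], theta: Sequence[float],
                 w2: Sequence[float], t: float) -> Vector:
    """Quadratic Bezier curve: (1-t)^2 * w1 + 2t(1-t) * theta + t^2 * w2."""
    a, b, c = (1.0 - t) ** 2, 2.0 * t * (1.0 - t), t ** 2
    return [a * x + b * y + c * z for x, y, z in zip(w1, theta, w2)]


def polychain_point(w1: Sequence[float], theta: Sequence[float],
                    w2: Sequence[float], t: float) -> Vector:
    """Polygonal chain with one bend: w1 -> theta on [0, 0.5], theta -> w2 on [0.5, 1]."""
    if t <= 0.5:
        s = 2.0 * t  # position along the first segment, in [0, 1]
        return [(1.0 - s) * x + s * y for x, y in zip(w1, theta)]
    s = 2.0 * t - 1.0  # position along the second segment, in [0, 1]
    return [(1.0 - s) * y + s * z for y, z in zip(theta, w2)]


# Toy 2-D "weights": both curves start at w1 and end at w2 by construction.
w1, theta, w2 = [0.0, 0.0], [1.0, 2.0], [2.0, 0.0]
bezier_mid = bezier_point(w1, theta, w2, 0.5)
chain_mid = polychain_point(w1, theta, w2, 0.5)
```

Both curves satisfy the endpoint constraints for any `theta`, so only `theta` needs to be trained. The polygonal chain passes through `theta` at `t = 0.5`, whereas the Bézier curve is only pulled toward it.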

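The parameters of the curve are optimized with a standard gradient-descent-based approach: at each iteration a curve position $t$ is sampled from the uniform distribution $U(0, 1)$, and a step is taken on the curve parameters $\theta$ with respect to the loss at the corresponding point on the curve, $\mathcal{L}(\phi_\theta(t))$.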
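The optimization loop can be illustrated on a toy problem. The sketch below is not the paper's setup: it assumes a hypothetical 2-D loss whose minima form a ring of radius 1, a quadratic Bézier curve with one control point, analytic gradients, and plain SGD. The control point is initialized slightly off the straight line between the endpoints, because on this symmetric toy loss the exact midpoint is a stationary point.

```python
import random
from typing import List


def loss(w: List[float]) -> float:
    """Toy loss (an assumption): zero on the unit circle, 1 at the origin."""
    r2 = w[0] ** 2 + w[1] ** 2
    return (r2 - 1.0) ** 2


def loss_grad(w: List[float]) -> List[float]:
    """Analytic gradient of the toy loss with respect to w."""
    r2 = w[0] ** 2 + w[1] ** 2
    return [4.0 * (r2 - 1.0) * w[0], 4.0 * (r2 - 1.0) * w[1]]


def bezier(w1: List[float], theta: List[float], w2: List[float], t: float) -> List[float]:
    a, b, c = (1.0 - t) ** 2, 2.0 * t * (1.0 - t), t ** 2
    return [a * x + b * y + c * z for x, y, z in zip(w1, theta, w2)]


def fit_curve(w1, w2, theta0, steps=2000, lr=0.05, seed=0):
    """SGD on theta: sample t ~ U(0, 1), then step on loss(bezier(..., t))."""
    rng = random.Random(seed)
    theta = list(theta0)
    for _ in range(steps):
        t = rng.random()
        g = loss_grad(bezier(w1, theta, w2, t))
        # Chain rule: d(bezier)/d(theta) = 2t(1-t) times the identity.
        scale = 2.0 * t * (1.0 - t)
        theta = [p - lr * scale * gi for p, gi in zip(theta, g)]
    return theta


def mean_loss_on_curve(w1, theta, w2, n=101):
    """Average loss over an evenly spaced grid of curve positions."""
    return sum(loss(bezier(w1, theta, w2, i / (n - 1))) for i in range(n)) / n


w1, w2 = [-1.0, 0.0], [1.0, 0.0]  # two minima on opposite sides of the ring
theta = fit_curve(w1, w2, theta0=[0.0, 0.1])
straight = mean_loss_on_curve(w1, [0.0, 0.0], w2)  # control point at the midpoint = straight line
trained = mean_loss_on_curve(w1, theta, w2)
```

Comparing `straight` with `trained` shows the effect described above: the straight segment crosses the high-loss region at the origin, while the trained curve bends around it. In a real network the analytic gradient would be replaced by backpropagation through the weights at the sampled point.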

These high-accuracy pathways can be leveraged to construct low-cost ensembles via a snapshot-ensemble-style algorithm. A cyclic learning rate schedule is used, in which large learning rates encourage movement in weight space while small rates promote convergence to accurate local minima. The learning rate schedule is a shifted annealed cosine,

$$
\alpha(k) = \frac{\alpha_0}{2}\left(\cos\left(\frac{\pi \, \mathrm{mod}(k - 1, \lceil T / M \rceil)}{\lceil T / M \rceil}\right) + 1\right),
$$

where $\alpha_0$ is the initial learning rate, $k$ is the iteration number, $T$ is the total number of iterations, and $M$ is the number of cycles.
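A minimal sketch of such a schedule follows, assuming the shifted cosine form used in snapshot ensembles with a 1-indexed iteration counter. The function name `cyclic_cosine_lr` and its argument names are hypothetical.

```python
import math


def cyclic_cosine_lr(iteration: int, total_iters: int, num_cycles: int, lr0: float) -> float:
    """Shifted annealed cosine: restarts at lr0 at the start of every cycle.

    `iteration` is 1-indexed; each cycle lasts ceil(total_iters / num_cycles) steps.
    """
    cycle_len = math.ceil(total_iters / num_cycles)
    pos = (iteration - 1) % cycle_len  # position within the current cycle
    return 0.5 * lr0 * (math.cos(math.pi * pos / cycle_len) + 1.0)


# 100 iterations split into 4 cycles of 25 steps each.
schedule = [cyclic_cosine_lr(k, total_iters=100, num_cycles=4, lr0=0.1) for k in range(1, 101)]
```

Within each cycle the rate decays from `lr0` toward zero, and a snapshot of the weights would typically be saved at the last step of the cycle, just before the rate jumps back up.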

The minima found through this procedure are then used as endpoints for the curve-fitting algorithm, and the weights at points along the fitted curve can be used to form independent ensemble members. This approach enables much larger ensembles of accurate members without training for additional epochs.
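Drawing ensemble members from a fitted curve can be sketched as below. Everything here is illustrative: the model is a hypothetical one-input logistic regression with weights `[slope, bias]`, the curve is a quadratic Bézier with made-up endpoint and control-point values, and members are taken at evenly spaced positions, which is one choice among many.

```python
import math
from typing import List


def bezier(w1: List[float], theta: List[float], w2: List[float], t: float) -> List[float]:
    a, b, c = (1.0 - t) ** 2, 2.0 * t * (1.0 - t), t ** 2
    return [a * x + b * y + c * z for x, y, z in zip(w1, theta, w2)]


def predict(w: List[float], x: float) -> float:
    """Hypothetical toy model: probability from logistic regression, w = [slope, bias]."""
    return 1.0 / (1.0 + math.exp(-(w[0] * x + w[1])))


def curve_ensemble(w1, theta, w2, num_members: int) -> List[List[float]]:
    """Weights at evenly spaced curve positions, including both endpoints."""
    ts = [i / (num_members - 1) for i in range(num_members)]
    return [bezier(w1, theta, w2, t) for t in ts]


def ensemble_predict(members: List[List[float]], x: float) -> float:
    """Average the predicted probabilities of all ensemble members."""
    return sum(predict(w, x) for w in members) / len(members)


# Made-up values standing in for two trained minima and a fitted control point.
w1, theta, w2 = [1.0, 0.0], [2.0, 0.5], [3.0, -0.5]
members = curve_ensemble(w1, theta, w2, num_members=5)
p = ensemble_predict(members, x=0.3)
```

No additional training happens in this step: each member costs only one evaluation of the curve, so the ensemble size is limited by inference cost rather than training cost.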