Dropout

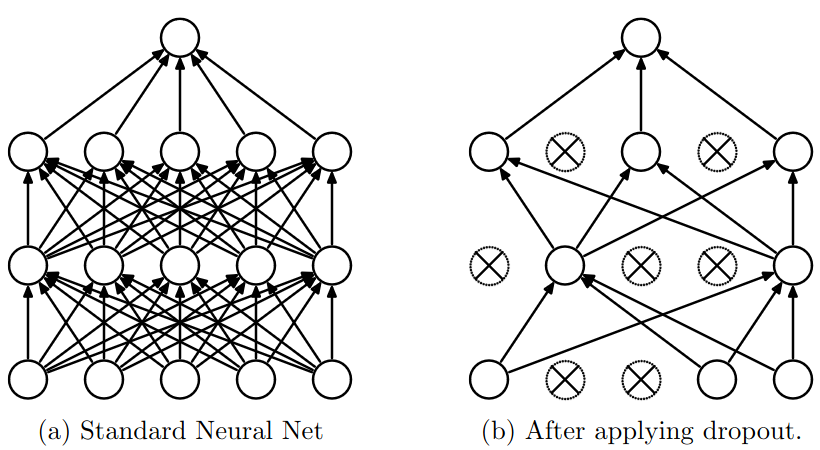

Dropout is a classic regularization technique in which randomly chosen neurons are masked (their outputs set to zero) on every forward pass during training. It is an effective and popular way to reduce overfitting: because any neuron and its associated connections may be dropped, no neuron can rely on specific other neurons being present, which reduces co-adaptation. In effect, training samples from an exponential number of sparse subnetworks that share weights, and these are implicitly ensembled at inference, when dropout is turned off and the full network is used.
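
As a rough illustration of the masking step, here is a minimal NumPy sketch of "inverted" dropout, a common variant that rescales the surviving activations during training so that no extra scaling is needed at inference. The function name `dropout_forward` and its parameters (`p_drop`, `training`, `rng`) are hypothetical names chosen for this example, not the API of any particular library.

```python
import numpy as np


def dropout_forward(x, p_drop=0.5, training=True, rng=None):
    """Illustrative inverted dropout (hypothetical helper, not a library API).

    x        : array of activations
    p_drop   : probability of zeroing each activation, in [0, 1)
    training : if False, dropout is turned off and x is returned unchanged
    rng      : optional np.random.Generator, for reproducibility
    """
    if not 0.0 <= p_drop < 1.0:
        raise ValueError("p_drop must be in [0, 1)")
    if not training or p_drop == 0.0:
        # Inference: use the full network, no masking.
        return x
    rng = np.random.default_rng() if rng is None else rng
    keep_prob = 1.0 - p_drop
    # A fresh random mask is drawn on every call, i.e. every forward pass.
    mask = rng.random(x.shape) < keep_prob
    # Scale survivors by 1 / keep_prob so the expected activation is unchanged.
    return x * mask / keep_prob


if __name__ == "__main__":
    activations = np.ones((4, 5))
    print(dropout_forward(activations, p_drop=0.5, rng=np.random.default_rng(0)))
    print(dropout_forward(activations, p_drop=0.5, training=False))
```

Each call in training mode draws a different mask, so each forward pass trains a different sparse subnetwork. With `training=False` the input passes through untouched, which corresponds to using the full network at inference.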